News Share | Legal And Ethical Risks In Artificial Intelligence (“AI”)

2023-06-12 09:29:10

Corporations and governments around the globe increasingly apply artificial intelligence (“AI”) to speed up legal research, make streets safer, target shopping more precisely and make healthcare more accurate. On the one hand, AI has become a common technological tool that helps us navigate our personal and professional lives with greater ease, flexibility and convenience. On the other hand, AI may be misused or may behave unpredictably, with potentially harmful consequences. Questions about the role of law, ethics and technology in governing AI systems are therefore more relevant than ever. We should not underestimate the legal and ethical risks behind our use of AI.

Professor John McCarthy, one of the founders of the field of AI, defined it as “…the science and engineering of making intelligent machines, especially intelligent computer programmes”. Recent Deloitte Insights research defines AI “as a field of computer science that includes machine learning, natural language processing, speech processing, robotics, and machine vision. Data and the rule-based algorithms are processed to identify patterns, which would give computers the ability to exhibit human-like cognitive abilities.”

AI and automation are responsible for a growing number of decisions by public authorities and the courts. Lawyers use AI daily in work such as contract review, predictive coding (e-discovery), litigation analysis, due diligence, legal research, e-billing and the automation of intellectual property work. AI has affected legal practice and the administration of law as a whole. With AI in daily use in the US legal market, an estimated 100,000 legal-related jobs could be automated by 2036. At the same time, AI gives legal and compliance professionals the means to remain competitive in cross-border practice. It magnifies our leverage and increases the importance of our expertise, professional judgment and innovation.

Technical Pitfalls of Using AI and Costly Lessons Learned

In reality, however, current AI systems are still not free from loopholes and defects. They are built from a range of methodologies and deployed in an array of complex digital applications, with technological challenges unknown to the public. AI systems are exposed to hacking, spamming, viruses, software malfunction and other computer vulnerabilities, as well as technical glitches and maintenance failures. In 2018, a Japanese man, Mr. Akihiko Kondo, made headlines when he ‘married’ a hologram of the virtual pop star Hatsune Miku. But the marriage was “short-lived”: the extremely costly maintenance service needed to summon the AI character was terminated in 2020.

On social media, disinformation campaigns powered by AI software, such as troll bots and deepfakes (e.g., altered video clips of politicians and celebrities in the United States), have threatened our societies’ access to accurate information, disrupted voters in elections and eroded social cohesion across jurisdictions. Misuse of AI can encourage fraud in the corporate sector and enable incitement of violence and social unrest for political gain. With deepfake content now widespread, common problems such as manipulation of the public, attacks on personal rights, and violations of intellectual property rights and personal data protection need an efficient remedy.

Bias Management in AI

AI and machine learning (ML) threats originate in the design of the algorithms and the training and development of the applications. Any algorithm is only as smart as the data its trainers feed it, and it is coloured by its manufacturers’ or designers’ past experience and sense of morality. The main ethical concerns in AI relate to:

management of bias built into the algorithm

the inputs or history on which AI relies

the fairness of the outcome that AI delivers or is intended to achieve

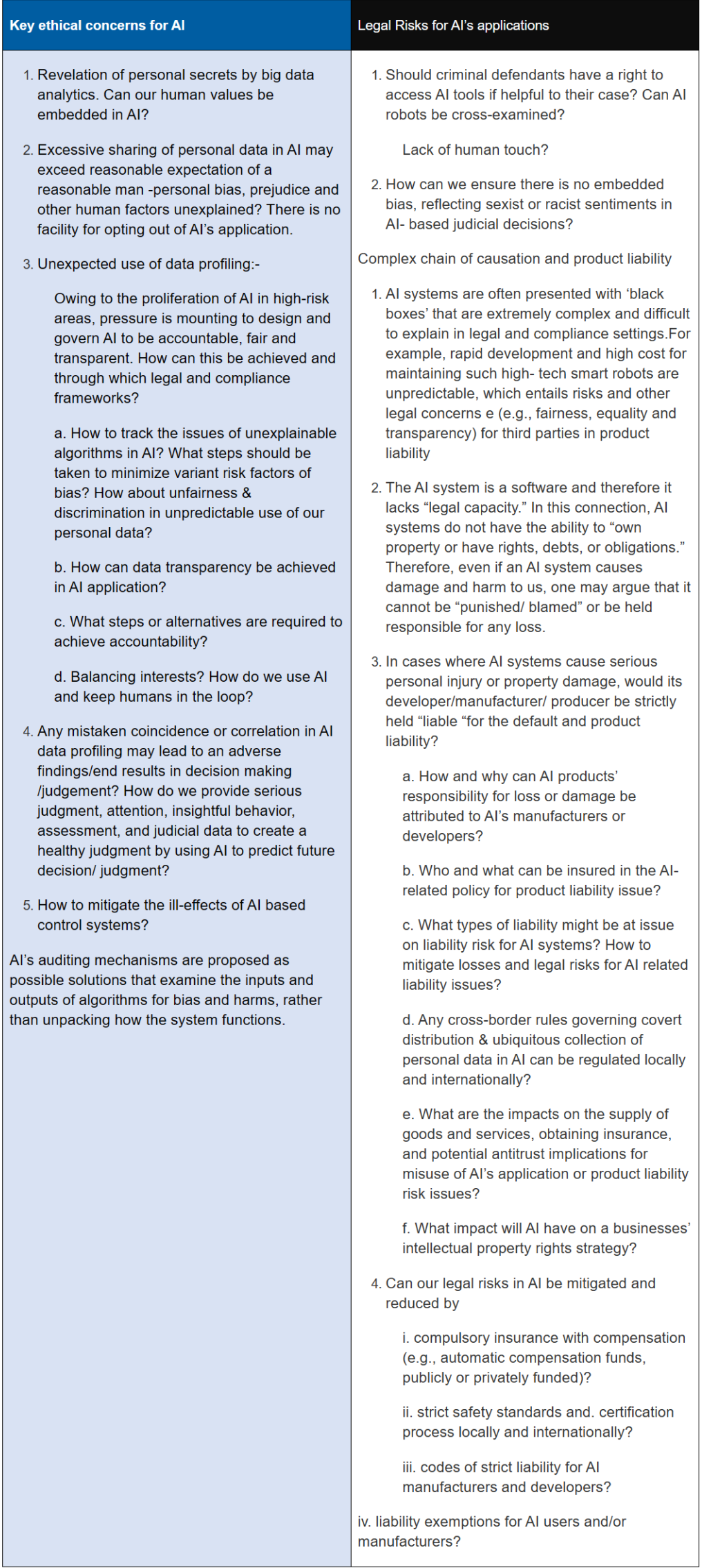

AI technologies are also subject to bias and pitfalls. Academics and regulators alike have been debating AI governance through research journals, articles, principles, regulatory measures and technical standards. We should stay alert to wrongdoers or criminals who intentionally develop or deploy AI for dangerous or malicious purposes, which raises the following ethical and legal concerns:

Diagram 1: Comparison of ethical and legal concerns for AI governance

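Main ethical concerns in AI

1. Big data analytics can expose personal and private information. Can human values be embedded in AI governance?

2. Over-sharing of personal data in AI services may go beyond individuals' reasonable expectations and be affected by personal favouritism, bias and other unexplained human factors. Individuals are given no option to opt out of AI applications.

3. Unexpected use of data profiling:

As AI spreads into more high-risk areas, pressure is mounting to design and govern AI so that it addresses accountability, fairness and data transparency. How can these AI governance goals be achieved, and through which legal and compliance frameworks?

a. How can unexplainable algorithmic problems in AI applications be traced? What steps should be taken to minimise the risk of bias? How can unfair and discriminatory outcomes be addressed when AI uses personal data unpredictably?

b. How can data transparency be achieved in AI applications?

c. What steps or alternatives are needed to achieve accountability?

d. How should stakeholders' interests be balanced? How can AI be used while keeping humans involved and sharing in its achievements?

4. Any spurious coincidence or correlation in AI data analysis could lead to adverse outcomes or errors in decisions and judgments. How can we ensure sound judgment, attention, insight, assessment and judicial data when AI predictions of future decisions are used to hand down far-reaching judgments or precedents?

5. How can the adverse effects of AI control systems be mitigated?

Some have suggested that audit mechanisms for AI are a possible solution: an audit examines an algorithm's inputs and outputs for bias and harm, rather than unpacking how the system works.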

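Legal risks of AI applications

1. If AI would help them, do defendants in criminal cases have the right to use AI tools in their defence? Can an AI robot be cross-examined?

Lack of a human touch?

2. How can we ensure that judicial decisions based on the inputs AI relies on are free from bias, sexism or racist sentiment?

Complex chains of causation and product liability issues

1. AI systems are often “black boxes” that are extremely complex and hard for the public to understand or explain in legal and compliance settings. For example, the rapid development of sophisticated AI robots and their high maintenance costs are unpredictable, exposing AI manufacturers and vendors to product liability risks and other legal issues (such as fairness, equality and transparency in AI product liability).

2. An AI system can be classified as software, and it lacks “legal capacity”. In this respect, an AI system has no capacity to “own property or have rights, debts or obligations”. Therefore, even if an AI system causes us loss, it cannot be “punished” or held liable for any loss.

3. If an AI system causes serious personal injury or property damage, will its developer, manufacturer or producer be held liable for breach of contract and for product liability?

a. How and why can liability for loss or damage caused by an AI product be attributed to its manufacturer or developer?

b. Who can insure against AI-related product liability? What would AI product cover include?

c. AI systems also carry civil and criminal liability risks. What liabilities may arise? How can losses and legal risks from AI-related liability be mitigated?

d. Can AI be strictly regulated under local and international law, including any cross-border rules governing the distribution and collection of personal data by AI?

e. How do misuse of AI applications and product liability risks affect the supply of goods and services, the availability of insurance, and potential antitrust liability?

f. What far-reaching impact will AI have on corporate intellectual property strategy?

4. Can our legal risks in AI be mitigated and reduced by the following means?

i. Paid compulsory insurance (for example, public or private funds that automatically compensate AI-related liability)?

ii. Strict AI-related liability and safety standards, with local and international certification processes?

iii. Strict liability codes for AI manufacturers and developers?

iv. Liability exemptions for AI users and/or manufacturers?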

International communities and corporations have increasingly been delegating complex, risk-intensive processes to AI systems. Examples include granting parole, diagnosing patients with critical illness, managing financial transactions and predictive policing. All of these create new challenges: for example, establishing who or what is at fault when liability arises from accidents involving automated vehicles; the limits of current legal frameworks in dealing with the disparate consequences of ‘big data’ or in preventing “algorithmic harms”; social injustice linked to automated enforcement and the misallocation of social welfare; and manipulation of online media or consumer spending. In 2018, Amazon was reported to have abandoned an AI recruiting tool found to be “favoring men over women.” Discriminatory selection of this kind in HR recruitment disadvantages female candidates.

Some argue that AI can lead to large-scale discrimination, misallocation of services and goods (with the use of AI dominated by specific industries, governments and companies), and economic displacement (e.g., the ethical crisis posed by jobs disappearing through AI-based automation). On predictive policing in the United States, researchers have also found that some AI algorithms which judges may use to determine sentencing discriminate against Black defendants and districts with predominantly Black residents. Because AI systems are so complex, it is difficult to assess an AI tool’s potential biases, the quality of its data and the soundness of its algorithm. All of this makes it difficult to contest AI-driven findings and decisions.
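One way to make the assessment problem more concrete is an outcome-based check that looks only at a tool's decisions, not at its internals. The Python sketch below is a minimal illustration under stated assumptions: the function names, the sample data and the 0.8 threshold are all hypothetical and are not taken from this article. The threshold is loosely borrowed from the “four-fifths” rule of thumb used in US employment contexts. The sketch compares the rate of favourable outcomes across groups and flags a large gap for human review.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favourable outcomes for each group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the system's output was favourable to the person.
    """
    totals = defaultdict(int)
    favourable = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            favourable[group] += 1
    return {group: favourable[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Return the lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group was favoured
    return min(rates.values()) / highest


# Hypothetical screening outcomes, invented purely for illustration.
sample = (
    [("men", True)] * 6 + [("men", False)] * 4
    + [("women", True)] * 3 + [("women", False)] * 7
)

rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)

# Assumed review threshold (not prescribed by the article).
flagged_for_review = ratio < 0.8
```

A low ratio does not by itself establish unlawful discrimination. It only signals that the outcomes deserve the kind of manual review by legal and compliance professionals that the article goes on to recommend.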

Roles of Legal and Compliance Professionals in AI’s Governance & Compliance

Because underrepresented and less powerful groups face a large potential for injustice when seeking social services and equality under AI governance, human input will still be needed in the form of manual reviews to ensure algorithmic accountability. Tech-savvy legal and compliance professionals may therefore be the best people to act as final gatekeepers in e-discovery and to safeguard AI-related regulatory and compliance issues. They may also serve as strong advocates for changes in the law to protect AI users and stakeholders from the potential consequences of over-reliance on AI technology, and to resolve these legal and ethical concerns.

AI has a wide range of influence: it can potentially modify the labour structure, affect laws and social ethics, violate personal privacy and challenge the norms of international relations. Ethical issues surrounding the use of AI in law often share a common theme. As AI becomes increasingly integrated into our legal system, society should ensure that the core legal values of fairness, transparency and equality are preserved in the daily use of AI. Given AI’s broad impact on our daily and social activities, these pressing questions can only be addressed successfully through multi-disciplinary remedies and international co-operation in AI governance and compliance.

In summary, we should remain open-minded in addressing AI governance, updating technical standards, ethical principles and professional codes of conduct so as to move forward in the cyber world. While remaining vigilant to possible cyber security challenges, let us strengthen all possible preventive measures, issue guidance and minimise the related risks in AI governance. Legal and compliance professionals will help to develop AI governance and to unlock massive opportunities to transform and revitalise the field of AI law, thus ensuring that AI development is safer, more reliable and more credible in the long run.

Original link:

https://www.hk-lawyer.org/content/legal-and-ethical-risks-artificial-intelligence-%E2%80%9Cai%E2%80%9D-0

Original title:

Legal And Ethical Risks In Artificial Intelligence (“AI”)

Source:

The Law Society of Hong Kong
